Founded in 2004 to help public and private sector companies save money through reverse auctions, Curtis Fitch has since expanded its offering into a source-to-contract procurement solution, which includes extensive supplier onboarding and evaluation, contract lifecycle management, and spend and performance management. Curtis Fitch offers the following capabilities in its solution.

Supplier Insights

CF Supplier Insights is their supplier registration, onboarding, information, and relationship management solution. It supports the creation and delivery of customized questionnaires, which can be associated with organizational categories anywhere in the 4-level hierarchy supported, so that suppliers are only asked to provide information that the organization needs for their qualification. You can track insurance and key certification requirements, with due dates for auto-reminders, to enable suppliers to self-serve. Supplier Insights offers task-oriented dashboards to help a buyer or evaluator focus in on what needs to be done.

The supplier management module presents supplier profiles in a clear, easy-to-view way, showing company details, registration audit, location, contact information, and so on. You can quickly view an audit trail of any activity that the supplier is linked to in CF Suite, including access to onboarding questionnaires, insurance and certification documents, events they were involved in, quotes they provided, contracts that were awarded, categories they are associated with, and balanced scorecards.

When insurance and certifications are requested, so is the associated metadata like coverage, award date, expiry date, and insurer/granter. This information is monitored, and both the buyer and supplier are alerted when the expiration date is approaching. The system defines default metadata for all suppliers, but buyers can add their own fields as needed.
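The expiry monitoring described above can be illustrated with a minimal sketch. This is not CF Suite's implementation; the `SupplierDocument` record, the `documents_needing_alert` function, the 30-day warning window, and the sample data are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class SupplierDocument:
    """Hypothetical record for an insurance policy or certification."""
    supplier: str
    doc_type: str
    expiry_date: date


def documents_needing_alert(documents, today, warn_days=30):
    """Return documents expiring between `today` and `today + warn_days`, inclusive.

    Documents that have already expired are excluded here; a real system
    would likely report those separately.
    """
    cutoff = today + timedelta(days=warn_days)
    return [d for d in documents if today <= d.expiry_date <= cutoff]


# Illustrative data only.
documents = [
    SupplierDocument("Acme Ltd", "Public liability insurance", date(2024, 7, 10)),
    SupplierDocument("Acme Ltd", "ISO 9001", date(2025, 1, 31)),
    SupplierDocument("Birch plc", "Cyber insurance", date(2024, 6, 1)),
]

alerts = documents_needing_alert(documents, today=date(2024, 6, 20), warn_days=30)
for doc in alerts:
    print(f"Alert buyer and {doc.supplier}: {doc.doc_type} expires {doc.expiry_date}")
```

With a reference date of 20 June 2024, only the policy expiring on 10 July falls inside the assumed 30-day window.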

It’s easy to search for suppliers by name, status, workflow stage, and location, or simply scan through them by name. The buyer can choose to “hide” suppliers that have not completed the registration process and they will not be available for sourcing events or contracting.

e-Sourcing

CF eSourcing is their sourcing project management and RFx platform where a user can define event and RFx templates, create multi-round sourcing projects, evaluate the responses using weighted scoring and multi-party ratings, define awards, and track procurement spend against savings. Also, all of the supplier metadata (such as insurance coverage and certifications) is available for scorecards, contracting, and event creation, so if a supplier doesn’t have the necessary coverage or certification, the supplier can be filtered out of the event, or the buyer can proactively ensure they are not invited.

Events can be created from scratch but are usually created from templates to support standardization across the business. An RFx template can define stakeholders, suppliers (or categories), and any sourcing information, including important documentation. In addition, a procurement workplan can be designed to reflect any sign-off gates required, which supports the public sector requirements that some buying organizations must adhere to.

Building RFx templates is easy to do, and there’s a variety of question styles available, depending on the response required from the vendor (e.g. free text, multichoice, file upload, financial, etc.). RFxs can be built by importing question sets, linking to supplier onboarding information, or via a template. The tool offers tender evaluation with auto-weighting and scoring functionality (based on values or pre-defined option selections). Their clients’ buyers can invite stakeholders to evaluate a tender, and the questions each evaluator scores can be pre-defined. In addition, when it comes to RFQs for gathering quotes, it supports total cost breakdowns and arbitrary formulas. Supplier submissions and quotes can be exported to Excel, including any supplier documents.
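To make the idea of weighted tender scoring concrete, here is a minimal sketch of one common approach, a weight-normalized average of per-question scores. It is an illustration only, not CF eSourcing's actual algorithm; the `weighted_score` function, the question names, the weights, and the scores are all hypothetical.

```python
def weighted_score(scores, weights):
    """Return the weighted average of per-question scores.

    `weights` maps question name -> weight; `scores` maps question name -> score.
    Weights are normalized by their total, so they need not sum to 100.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[q] * w for q, w in weights.items()) / total_weight


# Hypothetical weightings and evaluator scores on a 0-10 scale.
weights = {"price": 50, "quality": 30, "delivery": 20}
supplier_a = {"price": 8, "quality": 6, "delivery": 9}
supplier_b = {"price": 6, "quality": 9, "delivery": 7}

score_a = weighted_score(supplier_a, weights)
score_b = weighted_score(supplier_b, weights)
print(f"Supplier A: {score_a:.2f}, Supplier B: {score_b:.2f}")
```

In this example Supplier A scores 7.60 and Supplier B scores 7.10, because price carries half of the total weight.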

The one potential limitation is that there is not a lot of built-in analysis or side-by-side comparison for price analysis in Sourcing, as most buyers prefer to either do their analysis in Excel or use custom dashboards in analytics.

In addition, e-Sourcing events can be organized into projects that not only group related sourcing events and provide an overarching workflow, but can also be used to track actuals against the historical baseline and forecasted actuals for a realized savings calculation.
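The review does not say how CF Suite computes realized savings, so the following is only a sketch under a simple assumption: savings are the historical baseline minus the cost being compared (forecast or actual). The function names and all figures are hypothetical.

```python
def savings(baseline, cost):
    """Savings relative to the historical baseline (assumed formula)."""
    return baseline - cost


def savings_pct(baseline, cost):
    """Savings as a percentage of the baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100 * (baseline - cost) / baseline


# Illustrative figures for one sourcing project.
baseline_spend = 100_000   # historical spend before the event
forecast_spend = 90_000    # spend expected at award
actual_spend = 92_000      # spend actually incurred

forecast_savings = savings(baseline_spend, forecast_spend)
realized_savings = savings(baseline_spend, actual_spend)
shortfall = forecast_savings - realized_savings
realized_pct = savings_pct(baseline_spend, actual_spend)

print(f"Forecast savings: {forecast_savings}")
print(f"Realized savings: {realized_savings} ({realized_pct:.1f}%)")
print(f"Shortfall against forecast: {shortfall}")
```

Tracking both numbers shows not just what was saved against the baseline, but how far the realized result drifted from what was forecast at award.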

e-Auctions

CF Suite also includes CF Auctions. Four auction styles are available for running both forward and reverse auctions: English, Sequential, Dutch, and Japanese, all of which can be executed and managed in real time. Auctions are easy to define and very easy for the buying organization to monitor: buyers can see the current bid for each supplier, along with associated baseline and target information that is hidden from the suppliers. This lets them track progress against not only starting bids but also goals, and see a real-time evaluation of the benefit associated with a bid.

Suppliers get easy-to-use bidding views and, depending on the settings, will see either their current rank or their distance from the lowest bid, and can easily update their submissions or ask questions. Buyers can respond to suppliers one-on-one or send messages to all suppliers during the auction.

In addition, if something goes wrong, buyers can manage the event in real time and pause it, extend it, change owners, change supplier reps, and so on to ensure a successful auction.

Contract Management

CF Contracts enables procurement to build high-churn contracts with limited or no clause changes, for example NDAs or Terms of Service. CF Contracts has a clause library, workflow for internal sign-off, and integrated redline tracking. Procurement can negotiate with suppliers through the tool, and once a contract has been drafted in CF Suite, the platform can be used to track versions, see redlines, accept a version for signing, and manage the e-Signature process. If CF Suite was used for sourcing and a contract is awarded off the back of an event, the contract can be linked with the award information from the sourcing module.

Most of their clients focus on using CF Contracts as a central contract repository to improve visibility of key contract information, and to feed into reporting outputs that support the management of the contract pipeline, including contract spend and contract renewals.

The contract database includes a pool of common fields (e.g. contract title, start and end dates, contract values, etc.), and their clients can create custom fields to ensure the contract records align with their business data. Buyers can create automated contract renewal alerts that can be shared with the contract manager, business stakeholders, or the contract management team, as one would expect from a contract management module.

Supplier Scorecards

CF Scorecards is their compliance, risk, and performance management solution that collates ongoing supplier risk management information into a central location. CF Suite uses all of this data to create a 360-degree supplier scorecard for managing risk, performance, and development on an ongoing basis.

The great thing about scorecards is that you can select the questionnaires and third-party data you want to include, define the weightings, define the stakeholders who will be scoring the responses that can’t be auto-scored, and get a truly custom 360-degree scorecard on risk, compliance, and/or performance. You can attach associated documents, contracts, supplier onboarding questionnaires, third party assessments, and audits as desired to back up the scorecard, which provides a solid foundation for supplier performance, risk, and compliance management and development plan creation.

Data Analytics

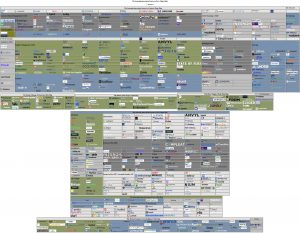

Powered by Qlik, CF Analytics provides out-of-the-box dashboards and reports to help analyze spend, manage contract pipelines and lifecycles, track supplier onboarding workflow and status, and manage ongoing supplier risk. Client organizations can also create their own dashboards and reports as required, or Curtis Fitch can create additional dashboards and reports for the client on implementation. Curtis Fitch has API integrations available as standard for those clients that wish to analyze data in their preferred business tool, such as Power BI or Tableau.

The out-of-the-box dashboards and reports are well designed and take full advantage of the Qlik tool. The process management, contract/supplier status, and performance management dashboards are especially well thought out and designed. For example, the project management dashboard will show you the status of each sourcing project by stage and task, how many tasks are coming due and overdue, the total value of projects in each stage, and so on. Other process-oriented dashboards for contracts and supplier management are equally well done. For example, the contract management dashboard allows you to filter by supplier category or contract grouping and see upcoming milestones in the next 30, 60, and 90 days, as well as overdue milestones.

The spend dashboards include all the standard dashboards you’d expect in a suite, and they are very easy to use, with built-in filtering capability to quickly drill down to the precise spend you are interested in. The only downside is that they are OLAP based, and updates are daily. However, they are considering adding support for one or more best-of-breed (BoB) spend analysis platforms for those that want more advanced analytics capability.

Overall

It’s clear that the Curtis Fitch platform is a mature, well-thought-out, fleshed-out platform for source-to-contract for indirect and direct services in both the public and private sectors, and a great solution not only for the global FTSE 100 companies they support, but also for the mid-market and enterprise market. It’s also very likely to be adopted, a key factor for success, because, as we pointed out in our headline, it’s very consumer friendly. While the UI design might look a bit dated (just like the design of Sourcing Innovation), it was designed that way because it’s extremely usable and, thus, very consumer friendly.

Curtis Fitch has an active roadmap that follows development best practices, alongside scoping workshops in which they partner with their clients to ensure new features and benefits are based on user requirements. Many modern applications with flashy UIs, modern hieroglyphs, and text-based conversational interfaces might look cool, but at the end of the day sourcing professionals want to get the job done and don’t want to be blinded by vast swathes of functionality when looking for a specific feature. Procurement professionals want a well-designed, intuitive, guided workflow, a ‘3-clicks and I’m there’ style application that will get the job done efficiently and effectively. This is what CF Suite offers.

Conclusion

While there are some limitations in award analysis (as most users prefer to do that in Excel) and analytics (as it’s built on Qlik Sense), and not a lot of functionality that is truly unique if you compare it to functionality in the market overall, it is one of the broadest and deepest mid-market+ suites out there and can provide a lot of value to a lot of organizations. In addition, Curtis Fitch also offers consulting and managed auction/RFx services, which can be very helpful to an understaffed organization: they can get some staff augmentation and event support while also having full visibility into the process and the ability to take over fully when they are ready. If you’re looking for a tightly integrated, highly usable, easily adopted Source-to-Contract platform with more contract and supplier management ability than you might expect, include CF Suite in the RFP. It’s certainly worth an investigation.